Bridging the Gap Between Large Models and Atomic Modeling

Large atomic models (LAMs) have undergone remarkable progress recently, emerging as universal or fundamental representations of the potential energy surface defined by the first-principles calculations of atomic systems. However, our understanding of the extent to which these models achieve true universality, and of how the different models compare with one another, remains limited. This gap is largely due to the lack of comprehensive benchmarks capable of evaluating the effectiveness of LAMs as approximations to the universal potential energy surface.

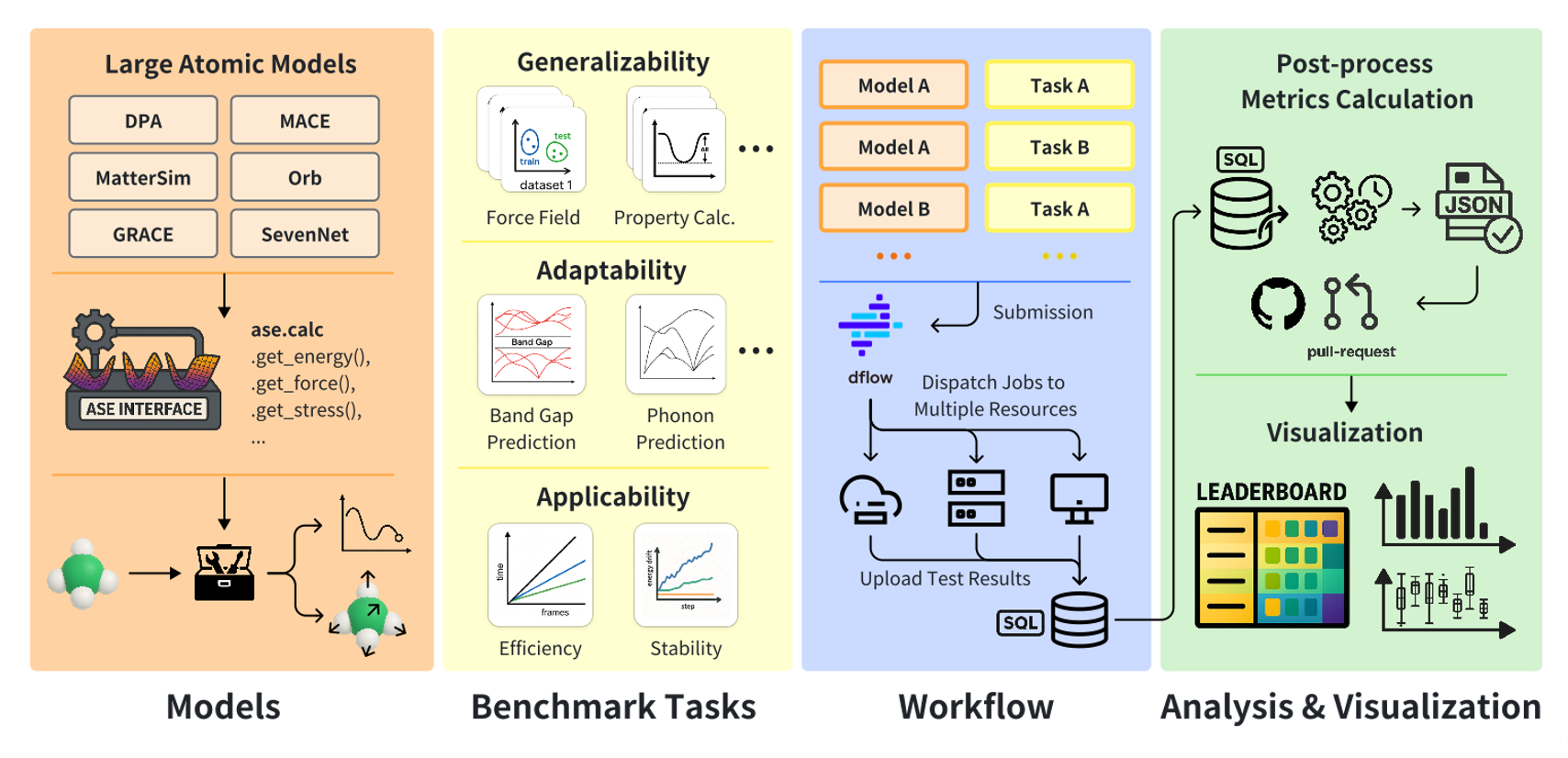

To bridge this gap, we introduce LAMBench, a benchmarking system designed to evaluate LAMs in terms of their generalizability, adaptability, and applicability, three attributes crucial for deploying LAMs as ready-to-use tools across diverse scientific discovery contexts. Generalizability pertains to the accuracy of an LAM when utilized as a universal potential across a diverse range of atomic systems. Adaptability denotes the LAM’s capacity to be fine-tuned for tasks beyond potential energy prediction, with particular emphasis on structure-property relationship tasks. Applicability concerns the stability and efficiency of deploying LAMs in real-world simulations.

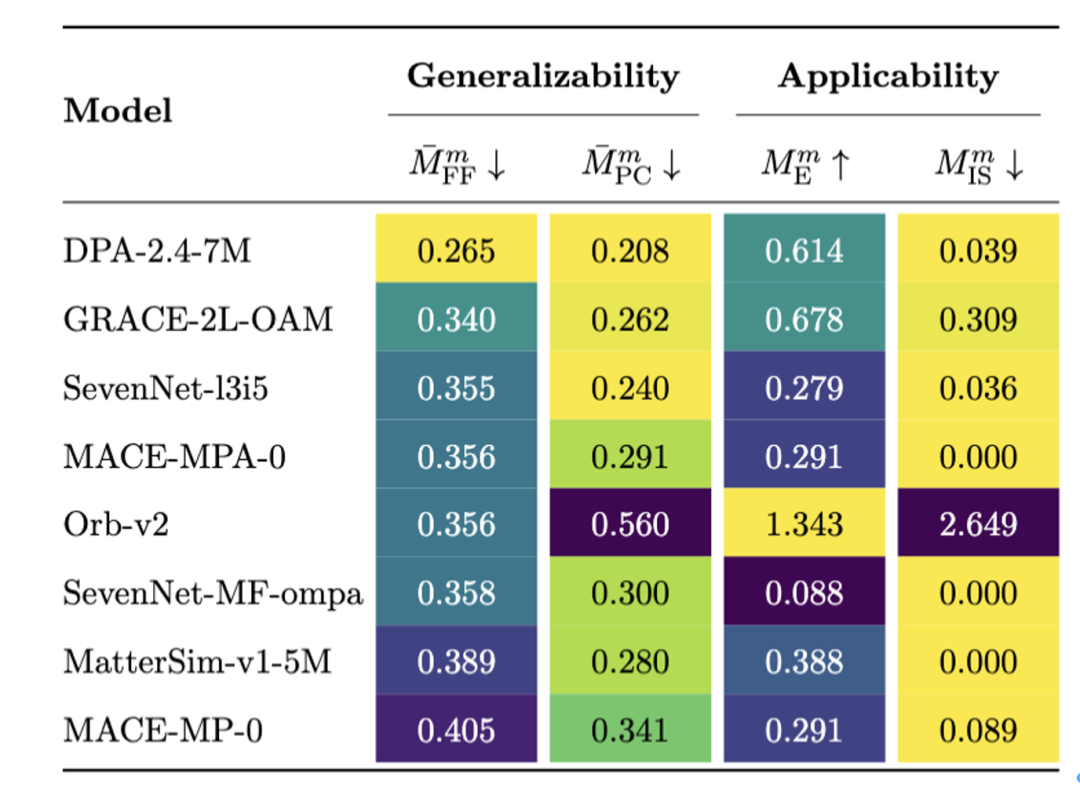

LAMBench adopts a highly modularized design, with automation built into task calculation, result aggregation, analysis, and visualization. Using LAMBench, we benchmarked 8 state-of-the-art LAMs. These models are compared based on their generalizability errors on force field prediction tasks ($\bar{M}^m_{\mathrm{FF}}$) and on property calculation tasks ($\bar{M}^m_{\mathrm{PC}}$). These error metrics are designed such that a dummy model has an error of 1, while a perfect model that matches the DFT labels achieves a metric of 0. Among the LAMs tested, DPA-2.4-7M demonstrated superior generalizability, owing to its multi-task, multi-fidelity training strategy. For applicability, we define the efficiency metric ($M^m_{\mathrm{E}}$) and the instability metric ($M^m_{\mathrm{IS}}$). A larger efficiency metric indicates higher efficiency, and a lower instability metric signifies greater stability. While achieving superior generalizability, DPA-2.4-7M also demonstrates decent stability and excellent efficiency among conservative models.
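To make the normalization of the error metrics concrete, here is a minimal sketch of one way to scale an error so that a dummy model scores 1 and a perfect model scores 0. It is an illustration under stated assumptions, not LAMBench's actual implementation: the function names `rmse` and `normalized_error` are hypothetical, the error is taken to be an RMSE, and the dummy model is assumed to predict the mean of the reference labels. The paper's own definition of the dummy model and its aggregation across tasks and domains may differ.

```python
import math


def rmse(pred, ref):
    """Root-mean-square error between two equal-length sequences."""
    if len(pred) != len(ref) or not ref:
        raise ValueError("pred and ref must be non-empty and equal in length")
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def normalized_error(pred, ref):
    """Model RMSE divided by the RMSE of a dummy baseline.

    Assumption: the dummy baseline predicts the mean reference label
    for every sample. This is a stand-in, not LAMBench's definition.
    """
    baseline = [sum(ref) / len(ref)] * len(ref)
    dummy_rmse = rmse(baseline, ref)
    if dummy_rmse == 0.0:
        raise ValueError("reference labels are constant; baseline error is zero")
    return rmse(pred, ref) / dummy_rmse


# Toy reference labels standing in for DFT values (made-up numbers).
ref = [1.0, 2.0, 3.0, 4.0]

perfect_score = normalized_error(ref, ref)        # predictions equal the labels
dummy_score = normalized_error([2.5] * 4, ref)    # predictions equal the label mean
print(perfect_score, dummy_score)
```

Dividing by a baseline error makes scores comparable across tasks whose labels have different units and scales, which is what allows per-task errors to be combined into a single figure per model.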

These results highlight the need for incorporating cross-domain training data, supporting multi-fidelity modeling, and ensuring the models’ conservativeness and differentiability. As a dynamic and extensible platform, LAMBench is intended to continuously evolve, thereby facilitating the development of robust and generalizable LAMs capable of significantly advancing scientific research.

Detailed information can be found at:

https://github.com/deepmodeling/LAMBench

https://www.aissquare.com/openlam?tab=Benchmark

https://arxiv.org/pdf/2504.19578