Dflow, the cloud-native AI4Science workflow framework | Supporting the development of scientific computing software and the OpenLAM model

A series of AI4Science scientific computing software and models is advancing rapidly: software ecosystems are developing in fields such as electronic structure calculations and molecular dynamics, large models like OpenLAM are being systematically evaluated, and scientific and industrial R&D problems such as biological simulations, drug design, and molecular property prediction are gradually being addressed. This progress depends on better research infrastructure, of which the Dflow project is a key component.

AI advancements are driving a paradigm shift in scientific research, but integrating scientific computing with AI faces challenges: efficient data generation and model training involve complex processes, and large-scale tasks must be managed and scheduled. Traditional manual task management is inefficient, and script-based automation lacks reusability and maintainability. The rapid scalability of cloud computing offers new opportunities for scientific research, but exploiting it calls for a new, user-friendly workflow framework that makes effective use of cloud and high-performance computing resources and connects algorithm design seamlessly with practical application.

In this context, the DeepModeling community initiated and gradually improved Dflow, a Python toolkit designed to help scientists build workflows. The core features of Dflow include:

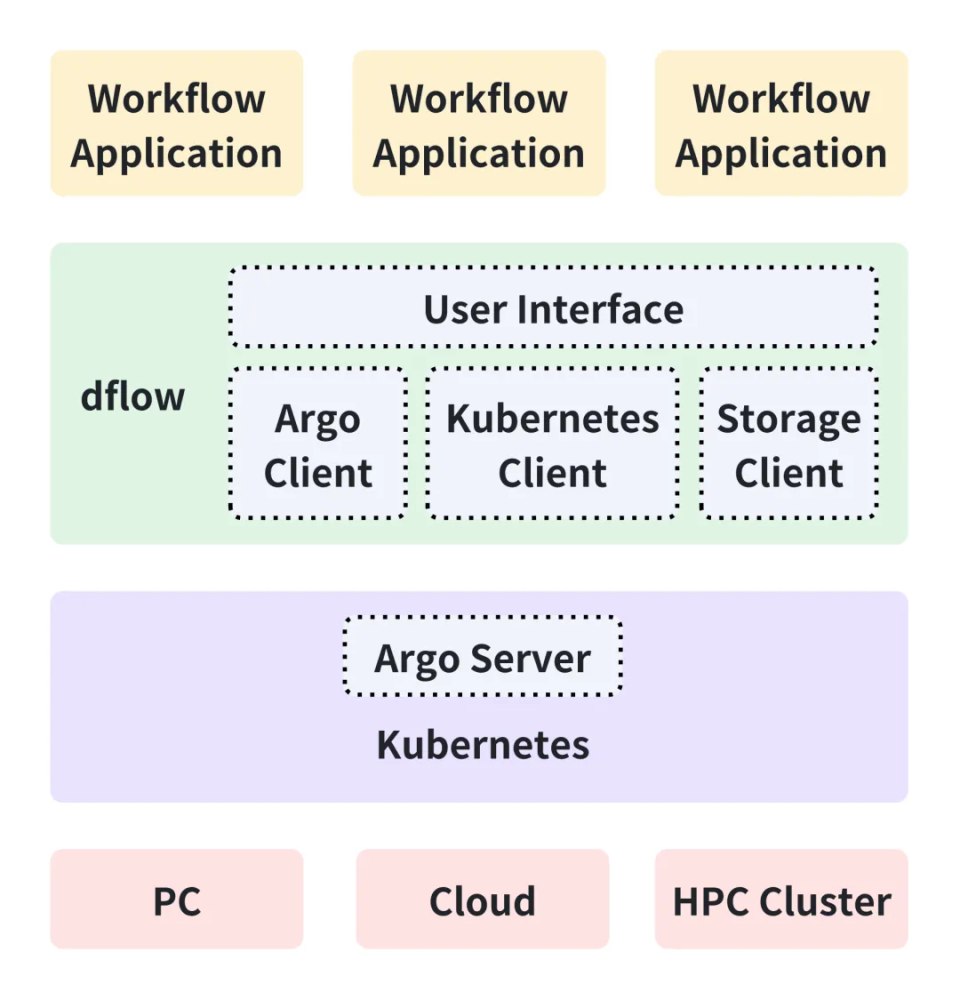

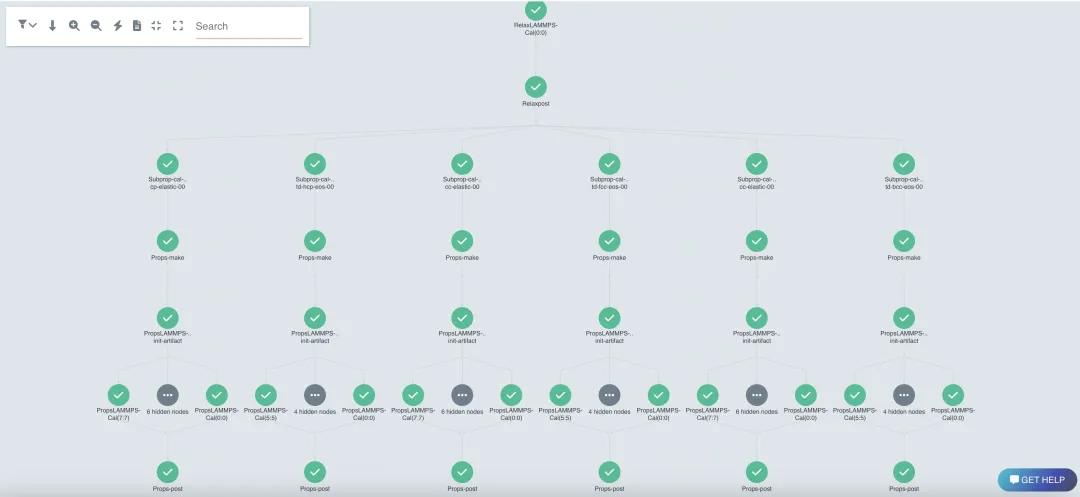

Enabling complex process control and task scheduling. Dflow integrates Argo Workflows for reliable scheduling and task management, uses container technology to ensure environmental consistency and reproducibility, and relies on Kubernetes to enhance workflow stability and observability. It can handle workflows with thousands of concurrent nodes.
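To make the scheduling idea concrete, here is a minimal, dependency-free Python sketch of what a workflow engine does conceptually: it runs steps in dependency order and executes each batch of independent steps concurrently. It deliberately does not use the Dflow or Argo Workflows API; the `run_dag` helper and the step names are hypothetical and serve only as an illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_dag(steps, deps, max_workers=4):
    """Run callables in dependency order (hypothetical helper, not Dflow API).

    steps: dict mapping step name -> callable taking a dict of upstream results
    deps:  dict mapping step name -> set of upstream step names
    """
    sorter = TopologicalSorter({name: deps.get(name, set()) for name in steps})
    sorter.prepare()
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Every step returned here has all of its upstream steps finished,
            # so the whole batch can be submitted in parallel.
            ready = sorter.get_ready()
            futures = {
                name: pool.submit(
                    steps[name], {d: results[d] for d in deps.get(name, set())}
                )
                for name in ready
            }
            for name, future in futures.items():
                results[name] = future.result()
                sorter.done(name)
    return results


# Hypothetical fan-out/fan-in workflow: "a" and "b" are independent,
# "total" waits for both.
steps = {
    "a": lambda upstream: 1,
    "b": lambda upstream: 2,
    "total": lambda upstream: upstream["a"] + upstream["b"],
}
deps = {"total": {"a", "b"}}
results = run_dag(steps, deps)
```

In a real deployment this bookkeeping is delegated to Argo Workflows on Kubernetes, with each step running in its own container rather than in a local thread.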

Adapting to various environments and supporting distributed and heterogeneous infrastructure. Dflow services can run on a single machine as well as on large cloud-based Kubernetes clusters. Dflow also interfaces easily with high-performance computing (HPC) environments and with Bohrium®, a cloud computing platform designed specifically for scientific research, making it suitable for diverse computing needs.

Offering simple interfaces, flexible rules, and local debugging support. Exception handling and fault tolerance strategies can be set before a workflow is submitted. Dflow also provides a flexible restart mechanism that allows previously completed steps to be seamlessly integrated into new workflows. Dflow's debug mode supports running workflows locally without containers, offering an experience consistent with Argo Workflows.
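The two ideas in this paragraph, retrying on recoverable failures and reusing completed steps on restart, can be sketched in plain Python. This is an illustration under stated assumptions, not Dflow's interface: `TransientError`, `run_steps`, and the step names below are hypothetical names defined only for this example.

```python
class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (e.g. a lost node)."""


def run_steps(steps, reuse=None, max_retries=2):
    """Run (key, func) pairs in order, skipping steps found in `reuse`.

    reuse: dict mapping step key -> output saved from an earlier run
    Returns (outputs, executed_keys).
    """
    reuse = reuse or {}
    outputs, executed = {}, []
    for key, func in steps:
        if key in reuse:
            # Restart case: take the saved output instead of recomputing.
            outputs[key] = reuse[key]
            continue
        for attempt in range(max_retries + 1):
            try:
                outputs[key] = func(outputs)
                break
            except TransientError:
                if attempt == max_retries:
                    raise  # retries exhausted, let the failure surface
        executed.append(key)
    return outputs, executed


calls = {"train": 0}


def flaky_train(outputs):
    """Fails once with a transient error, then succeeds."""
    calls["train"] += 1
    if calls["train"] == 1:
        raise TransientError("simulated transient failure")
    return outputs["prepare"] * 2


steps = [("prepare", lambda outputs: 21), ("train", flaky_train)]

# First run: "train" fails once and is retried automatically.
first_outputs, first_executed = run_steps(steps)

# Restart: the completed "prepare" step is reused, only "train" runs again.
second_outputs, second_executed = run_steps(
    steps, reuse={"prepare": first_outputs["prepare"]}
)
```

The design point is that the retry policy and the set of reusable steps are decided before execution starts, which mirrors the "set before submission" behavior described above.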

The diagram below illustrates Dflow within the AI for Science computing framework:

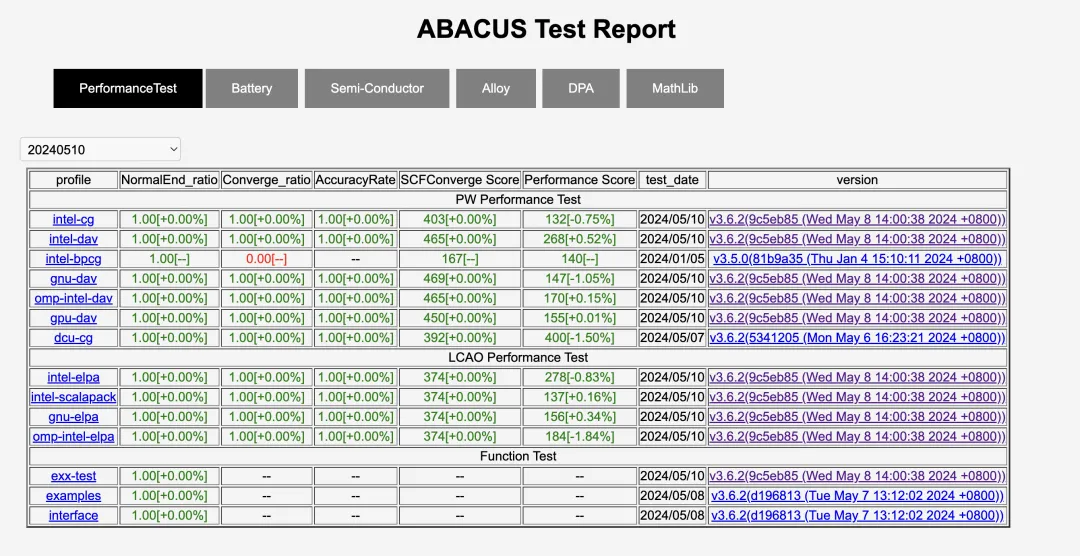

So far, many workflow projects have been developed based on Dflow, covering various fields from electronic structure calculations and molecular dynamics to biological simulations, drug design, material property predictions, and automated software testing. Representative open-source software includes:

APEX: Workflow for alloy property calculations.

DPGEN2: Second-generation DP potential generator.

Rid-kit: Enhanced dynamics toolkit.

DeePKS-flow: Building machine learning density functionals.

VSW: Virtual screening workflow in drug design.

ABACUS: Automated evaluation workflow.

Dflow-galaxy: Series of tools and workflows based on Dflow and ai2-kit.

ir-flow: Spectral calculation workflow.

ThermalConductivity-Workflow: Thermal conductivity calculation workflow.

Various industrial application software packages and computing platforms have also been developed on the Dflow framework and have made systematic progress.

These extensive applications demonstrate the openness and scalability of Dflow. In addition, the basic units in Dflow (called OPs) can be reused across different workflow applications. The Dflow ecosystem has accumulated a set of reusable OPs, such as FPOP, a collection of OPs for first-principles calculations (project link: https://github.com/deepmodeling/fpop). These components not only facilitate the development of complex workflows within the scientific community but also give researchers a collaborative environment in which to share best practices and innovations. Driven by an open and scalable architecture, the community ecosystem keeps Dflow at the forefront of workflow management and provides robust support for the evolving needs of the scientific and technological community.
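Why declared inputs and outputs make a unit reusable can be shown with a small, dependency-free sketch. The `SimpleOP` class below is a hypothetical stand-in written for this example; it is not the Dflow OP class, and the `scale` operation is invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class SimpleOP:
    """Hypothetical stand-in for an OP: a function plus a declared signature."""

    name: str
    inputs: Dict[str, type]
    outputs: Dict[str, type]
    func: Callable[..., Dict[str, Any]]

    def __call__(self, **kwargs):
        self._check(kwargs, self.inputs, "input")
        result = self.func(**kwargs)
        self._check(result, self.outputs, "output")
        return result

    def _check(self, values, signature, kind):
        # Names must match the declaration exactly, and types must agree.
        if set(values) != set(signature):
            raise TypeError(
                f"{self.name}: expected {kind}s {sorted(signature)}, "
                f"got {sorted(values)}"
            )
        for key, expected in signature.items():
            if not isinstance(values[key], expected):
                raise TypeError(
                    f"{self.name}: {kind} '{key}' must be {expected.__name__}"
                )


scale = SimpleOP(
    name="scale",
    inputs={"value": float, "factor": float},
    outputs={"scaled": float},
    func=lambda value, factor: {"scaled": value * factor},
)

# The same OP is reused, unchanged, by two hypothetical workflows.
result_a = scale(value=2.0, factor=1.5)
result_b = scale(value=10.0, factor=0.5)
```

Because the signature is explicit, a workflow author can wire such a unit into a new workflow without reading its implementation, and mismatched inputs fail early with a clear message.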

In previous versions, the requirement to set up an Argo server posed a challenge for some novice users. To address this, the core developers of Dflow built an out-of-the-box workflow middleware service on the Bohrium research cloud platform (https://workflows.deepmodeling.com/), along with a beginner's guide based on Bohrium Notebook.

Notebook link: https://bohrium.dp.tech/notebooks/1211442075

If you are interested in developing efficient, user-friendly, and automated workflows in the AI4Science era, you are welcome to try Dflow.

[1] https://github.com/deepmodeling/dflow

[2] https://arxiv.org/abs/2404.18392